The Open Source Initiative (OSI) has condemned the US technology company Meta for falsely describing its Llama family of AI models as "open-source." Stefano Maffulli, head of the OSI, warns that Meta's actions undermine the credibility of the term "open-source" and confuse users.

Meta argues that the Llama models are openly accessible, citing more than 400 million downloads. However, according to Maffulli and the OSI, these models are not fully open-source, as essential components such as the training algorithms and the underlying software are not disclosed. These limitations hinder the kind of customization and experimentation that characterizes open-source software.

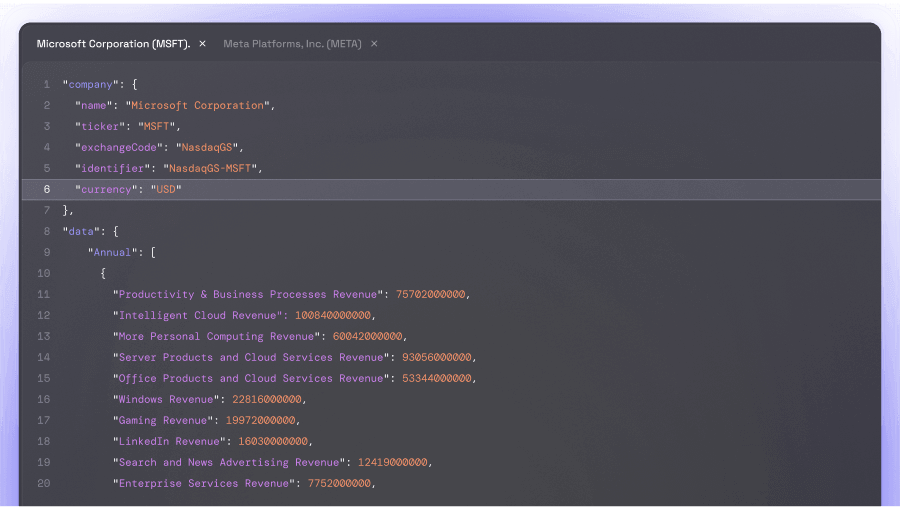

In an interview with the Financial Times, Maffulli stated that Meta's use of the term "open-source" is particularly harmful as institutions like the European Commission aim to promote genuine open-source technologies that operate independently of individual companies. He emphasized that other tech giants such as Google and Microsoft had already stopped labeling their AI models as open-source once it became clear that they did not meet the strict criteria.

Meta defended itself by stating that the existing open-source definitions for software do not meet the complex requirements of modern AI models. The company emphasized that Llama is a cornerstone of global AI innovation and that it is working with the industry on new definitions to ensure safety and responsibility within the AI community.

The OSI, however, emphasizes that improper use of the term "open-source" could, in the long run, impede the development of AI technologies controlled by users rather than by a few large tech corporations. Ali Farhadi, head of the Allen Institute for AI, criticized Meta for not giving developers full insight into the models and thus preventing them from building their own products on them.

With the forthcoming release of its official definition of "open-source AI," the OSI demands that, in addition to the model weights, the training algorithms and the software used be disclosed. Additionally, companies should release the data on which their models were trained, provided that privacy and legal regulations permit this.

Maffulli further warns that companies like Meta, which define the term "open-source" to their own advantage, could integrate their patented, revenue-generating technologies into EU-funded standards, undermining regulatory efforts.