Amazon is pushing further into the semiconductor market with new AI chips meant to reduce its dependence on Nvidia and make its own cloud infrastructure more efficient. Austin-based Annapurna Labs, which Amazon acquired in 2015, developed the latest chip generation, "Trainium 2," which is designed specifically for AI training and is set to be unveiled in December. Among the first customers testing it are Anthropic, Databricks, and Deutsche Telekom.

The goal is to offer an alternative to Nvidia. "We want to be the best platform for Nvidia, but it is healthy to have an alternative," said Dave Brown, Vice President of Compute at AWS. Amazon says its own AI chips, including "Inferentia," are already 40 percent cheaper than Nvidia's products.

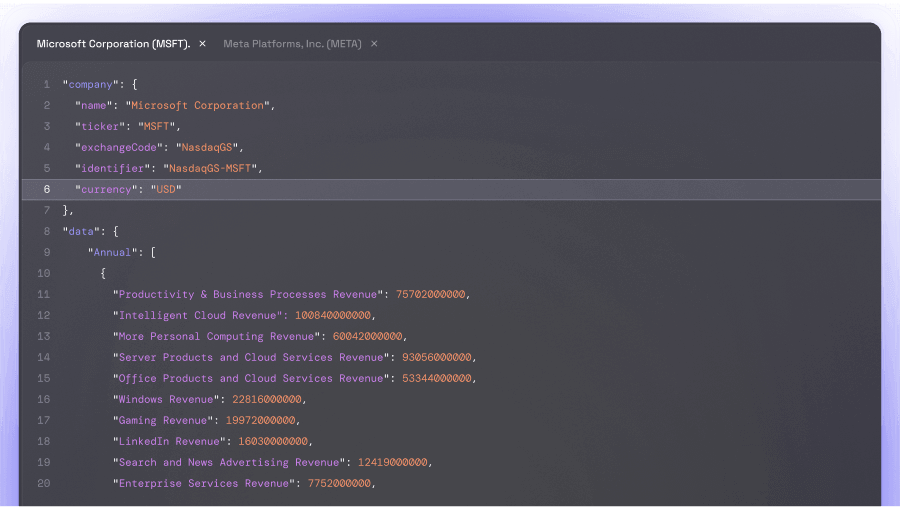

Amazon's investments in infrastructure and technology are rising sharply: in 2024 they are expected to reach around 75 billion dollars, significantly more than the 48.4 billion of the previous year. The same strategy can be seen at other tech giants such as Microsoft and Google, which are also investing in their own chips to meet the growing demands of the AI revolution.

"Every cloud provider is striving for vertical integration," says Daniel Newman of the Futurum Group. By developing chips in-house, companies like Amazon and Meta gain cost advantages and more control.

So far, however, the push has had only a limited impact on Nvidia. In the last quarter, Nvidia generated $26.3 billion in revenue from AI data center chips, roughly as much as Amazon's entire AWS division brought in during the same period.